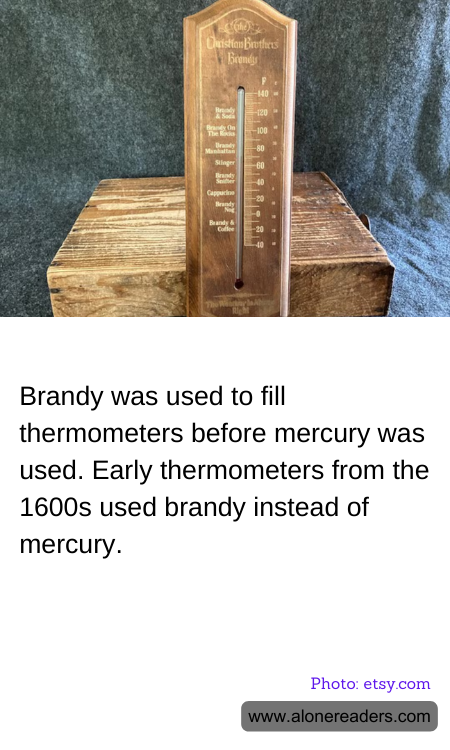

The history of thermometers is a story of scientific experimentation and discovery. Before mercury came into use, other liquids were tested for their ability to measure temperature accurately and consistently. Notably, one of the earliest substances used was brandy. It was chosen because it was easy to see in the glass tube and because it remains liquid over a considerable range of temperatures.

During the 1600s, when the scientific revolution was in full swing, inventors and scientists sought more reliable methods to understand and measure the natural world. The development of the thermometer was crucial in this era, as it provided a way to quantify heat and cold. The earliest instruments, known as thermoscopes, had no scale and could only indicate whether the temperature was rising or falling. Brandy was used mainly because it expands and contracts visibly with changes in temperature, allowing for rudimentary temperature readings.

However, brandy was eventually phased out in favor of mercury for several reasons. Although mercury expands less than alcohol for a given change in temperature, it proved to be a superior thermometric liquid because it expands at a nearly uniform rate and remains liquid across a wide range of temperatures, from about -38.8 degrees Celsius to about 356.7 degrees Celsius. Furthermore, mercury does not wet or stick to the glass of the thermometer, providing more precise and reliable readings.

The switch from brandy to mercury enabled the development of more accurate and dependable thermometers, which benefited fields ranging from meteorology to medicine. Despite mercury's toxicity, its use in thermometers continued until recent times, when concerns about its environmental and health risks led to the adoption of safer alternatives such as alcohol-based and digital thermometers.

Thus, the evolution from brandy to mercury in thermometers is a small yet telling example of how scientific tools change as standards for precision and safety rise.