In the realm of digital communication, network latency emerges as a critical factor shaping user experience and operational efficiency. Latency, in simple terms, is the time delay a network introduces into data transmission. It is commonly measured as round-trip time (RTT): the time a data packet takes to travel from its source to its destination and for the response to come back. In an era where speed is synonymous with efficiency, high latency is often a bottleneck for productivity and smooth business operations. For applications that require real-time processing, such as fluid dynamics simulations or other high-performance computing tasks, low latency is not just beneficial but essential. When latency spikes, application performance degrades, which can lead to operational failures.
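Because latency is usually reported as RTT, a rough way to observe it from code is to time a TCP handshake, whose client side completes after one round trip (SYN out, SYN-ACK back). The sketch below uses only Python's standard `socket` and `time` modules; the helper name `tcp_connect_rtt` is hypothetical, and the measurement includes some local overhead, plus a DNS lookup if you pass a hostname instead of an IP address. The demo targets a listener on the local machine so it runs offline, which gives a near-zero RTT; point it at a remote server to measure a real network path.

```python
import socket
import time


def tcp_connect_rtt(host: str, port: int, timeout: float = 2.0) -> float:
    """Return the time in milliseconds to complete a TCP handshake with host:port.

    connect() returns once the server's SYN-ACK arrives, so its duration
    approximates one network round trip plus a little local overhead.
    Passing a hostname (not an IP) also adds DNS resolution time.
    """
    start = time.perf_counter()
    with socket.create_connection((host, port), timeout=timeout):
        pass  # handshake done; close immediately
    return (time.perf_counter() - start) * 1000.0


if __name__ == "__main__":
    # Local demo listener so the example runs without network access.
    with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as server:
        server.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        server.listen()
        port = server.getsockname()[1]
        samples = [tcp_connect_rtt("127.0.0.1", port) for _ in range(5)]
        print(f"min RTT: {min(samples):.3f} ms, max RTT: {max(samples):.3f} ms")
```

Taking the minimum of several samples approximates the path's baseline latency, while the spread between samples hints at how much it spikes.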

Latency, bandwidth, and throughput are often used interchangeably, but they have distinct meanings. Latency is the delay in data transfer; bandwidth is the maximum amount of data a link can carry per unit of time; and throughput is the rate at which data is actually delivered successfully over the network. Latency and bandwidth are separate metrics, and throughput is shaped by both: it can never exceed the available bandwidth, and high latency can hold it well below that limit. For activities like live streaming or online gaming, low latency is crucial to avoid delays between actions and their digital representation. For bulk transfers such as large file downloads, by contrast, bandwidth matters more, because the one-time delay is small compared with the time spent moving the data itself. Understanding the interplay between these elements is therefore vital for optimizing network performance.
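This interplay can be made concrete with a simplified model: fetching a resource costs roughly one round trip plus the time to push its bytes through the link, and a sender that may keep only a limited amount of unacknowledged data in flight (as TCP does with its window) cannot exceed that window divided by the RTT. The sketch below is an illustration under those assumptions only; it ignores TCP slow start, packet loss, and protocol overhead, and the function names and the 1 Gbit/s, 100 ms link are hypothetical.

```python
def transfer_time_s(size_bytes: float, bandwidth_bps: float, rtt_s: float) -> float:
    """Simplified fetch time: one round trip plus serialization time."""
    return rtt_s + size_bytes * 8 / bandwidth_bps


def bandwidth_delay_product_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bytes that must be in flight to keep the link busy for a full RTT."""
    return bandwidth_bps * rtt_s / 8


def window_limited_throughput_bps(window_bytes: float, rtt_s: float) -> float:
    """Throughput ceiling when at most window_bytes may be unacknowledged."""
    return window_bytes * 8 / rtt_s


LINK_BPS = 1e9  # hypothetical 1 Gbit/s link
RTT_S = 0.100   # hypothetical 100 ms round trip

print(f"1 KB request:  {transfer_time_s(1_000, LINK_BPS, RTT_S):.6f} s")  # → 0.100008 s
print(f"1 GB download: {transfer_time_s(1e9, LINK_BPS, RTT_S):.1f} s")    # → 8.1 s
print(f"BDP: {bandwidth_delay_product_bytes(LINK_BPS, RTT_S) / 1e6:.1f} MB")  # → 12.5 MB
# 65,535 bytes is the largest TCP window without the window-scaling option.
print(f"Window cap: {window_limited_throughput_bps(65_535, RTT_S) / 1e6:.2f} Mbit/s")  # → 5.24
```

On the same link, the small request is almost entirely latency while the large download is almost entirely bandwidth, and an unscaled TCP window would cap a single connection at a few Mbit/s despite the 1 Gbit/s capacity.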

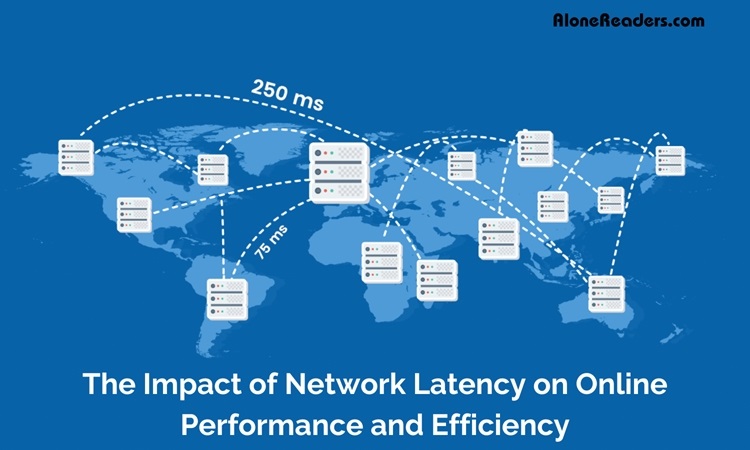

Network latency is influenced by several factors, including the physical distance between communication points, the quality of networking equipment, and the type of connection (e.g., satellite, fiber optic, or cable). Satellite services such as HughesNet cover vast areas but inherently suffer from higher latency, because every data packet must travel up to a satellite in orbit and back down. Land-based connections such as fiber optics typically offer lower latency.
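The distance effect follows from propagation delay, which is simply distance divided by signal speed. The sketch below compares a geostationary satellite hop (the kind of orbit used by services such as HughesNet) with a terrestrial fiber route. It assumes radio signals travel at roughly the speed of light in vacuum and light in fiber at roughly 200,000 km/s, a common approximation of about two-thirds of c; the 5,000 km route and the function name are hypothetical. Queuing, processing, and routing delays are ignored, so both figures are lower bounds.

```python
C_VACUUM_KM_S = 299_792.458  # speed of light in vacuum
C_FIBER_KM_S = 200_000.0     # rule of thumb: light in glass fiber travels at about 2/3 c
GEO_ALTITUDE_KM = 35_786.0   # altitude of geostationary orbit above the equator


def propagation_rtt_ms(one_way_path_km: float, speed_km_s: float) -> float:
    """Round-trip propagation delay in milliseconds for a path traversed out and back."""
    return 2 * one_way_path_km / speed_km_s * 1000


# Through a GEO satellite, each direction is ground -> satellite -> ground,
# so the one-way path is at least twice the orbital altitude (longer in
# practice, since ground stations are rarely directly beneath the satellite).
geo_rtt = propagation_rtt_ms(2 * GEO_ALTITUDE_KM, C_VACUUM_KM_S)

# A hypothetical 5,000 km terrestrial fiber route.
fiber_rtt = propagation_rtt_ms(5_000, C_FIBER_KM_S)

print(f"GEO satellite minimum RTT: {geo_rtt:.0f} ms")    # → 477 ms
print(f"5,000 km fiber RTT:        {fiber_rtt:.0f} ms")  # → 50 ms
```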

In a business context, latency affects not only immediate operational efficiency but also long-term strategic planning. The rise of the Internet of Things (IoT) and cloud-based solutions makes low latency indispensable. High latency can disrupt core business functions, causing inefficiencies and degrading applications that rely on real-time data, such as smart sensors and automated production systems.

Acceptable latency thresholds vary by application. For most online activities, latency under about 125 ms is adequate, although latencies above 100 ms, while still manageable for non-streaming tasks, can cause perceptible delays in real-time ones. For latency-sensitive uses such as online gaming, 30-40 ms is desirable.
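One way to apply these rules of thumb is to classify measured latency against them. In the sketch below, the cut-offs (40, 100, and 125 ms) come from the paragraph above, while the labels, function names, and sample values are illustrative assumptions rather than any standard.

```python
import statistics

# Rule-of-thumb cut-offs in milliseconds, checked in ascending order.
THRESHOLDS_MS = [
    (40.0, "suitable for latency-sensitive uses such as gaming"),
    (100.0, "good for most interactive use"),
    (125.0, "adequate for most online activities; may feel slow in real-time tasks"),
]


def classify_latency(latency_ms: float) -> str:
    """Return the label of the first threshold the latency does not exceed."""
    for limit, label in THRESHOLDS_MS:
        if latency_ms <= limit:
            return label
    return "high: perceptible delays likely"


def classify_samples(samples_ms: list[float]) -> str:
    """Classify a set of measurements by their median value."""
    return classify_latency(statistics.median(samples_ms))


# Hypothetical measurements with one outlier; the median is 33 ms.
print(classify_samples([28.0, 35.0, 31.0, 90.0, 33.0]))
```

The median keeps a single outlier from dominating the verdict; if occasional spikes matter more for your application, classifying a high percentile (for example via `statistics.quantiles`) would be a stricter choice.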

Reducing latency can be challenging, especially on satellite links or with some mobile virtual network operators (MVNOs). However, there are strategies to mitigate high latency:

In conclusion, understanding and managing network latency is crucial for both individuals and businesses in the digital age. By recognizing the nuances of latency, bandwidth, and throughput, and implementing effective strategies, one can significantly enhance online performance and operational efficiency.